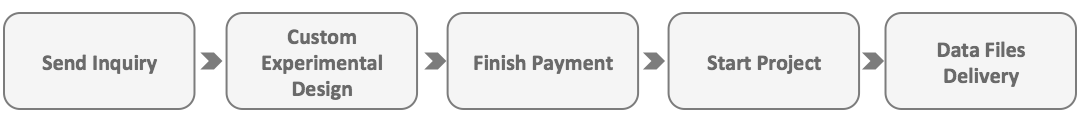

Workflow of Proteomics Data Quality Assessment

Proteomics is the large-scale study of the entire set of proteins in a cell, tissue, or organism. Proteomics datasets often contain substantial noise, redundancy, and systematic bias, which can lead to incorrect interpretation if left unchecked. The primary goal of data quality assessment is to filter out inaccurate and low-quality protein identifications and quantifications so that research findings rest on robust data.

Key Steps in Data Quality Assessment

1. Data Preprocessing

The first step is data preprocessing, which aims to remove technical noise and correct systematic errors introduced during experimentation. Common preprocessing tasks include removing low-quality mass spectrometry peaks, baseline correction, normalization, and filtering out background signals. These steps improve the accuracy and overall quality of the dataset.
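As one illustration of the normalization task mentioned above, the following is a minimal sketch of median normalization on log2-transformed intensities. The function name `median_normalize`, the input layout (sample name mapped to protein intensities), and the choice to drop non-positive values are assumptions made for this example, not a prescribed method.

```python
import math
from statistics import median


def median_normalize(samples):
    """Log2-transform intensities and center every sample on a shared median.

    `samples` maps a sample name to {protein_id: raw_intensity}.
    Missing or non-positive intensities are dropped here rather than imputed
    (an assumption of this sketch). Each sample must keep at least one value.
    """
    logged = {
        name: {p: math.log2(v) for p, v in intensities.items() if v and v > 0}
        for name, intensities in samples.items()
    }
    sample_medians = {name: median(vals.values()) for name, vals in logged.items()}
    # Shift every sample so its median equals the median of all sample medians.
    target = median(sample_medians.values())
    return {
        name: {p: v - sample_medians[name] + target for p, v in vals.items()}
        for name, vals in logged.items()
    }


# Hypothetical data: run_B has the same profile as run_A at twice the loading.
raw = {
    "run_A": {"P1": 1000.0, "P2": 4000.0, "P3": 16000.0},
    "run_B": {"P1": 2000.0, "P2": 8000.0, "P3": 32000.0},
}
normalized = median_normalize(raw)
```

After normalization the two runs in this toy example have matching values for each protein, because the only difference between them was a constant loading factor. Real datasets may call for other normalization strategies depending on the experimental design.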

2. Data Filtering

Next, data filtering is applied to remove unreliable or irrelevant data. Filtering strategies often include removing proteins with low abundance or low confidence scores. By setting appropriate confidence thresholds, low-confidence identifications that may result from noise can be excluded, thereby improving the reliability of the final dataset.
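A threshold-based filter of this kind might be sketched as follows. The `ProteinID` record, its `q_value` and `n_peptides` fields, and the default cutoffs are hypothetical choices for illustration; appropriate thresholds depend on the search engine and study design.

```python
from dataclasses import dataclass


@dataclass
class ProteinID:
    accession: str
    q_value: float   # hypothetical confidence score; lower means more confident
    n_peptides: int  # number of peptides supporting the identification


def filter_identifications(ids, max_q=0.01, min_peptides=2):
    """Split identifications into kept and removed lists.

    The default cutoffs are assumptions for this sketch, not recommended values.
    """
    kept, removed = [], []
    for pid in ids:
        if pid.q_value <= max_q and pid.n_peptides >= min_peptides:
            kept.append(pid)
        else:
            removed.append(pid)
    return kept, removed


# Hypothetical identifications.
ids = [
    ProteinID("P1", 0.001, 5),
    ProteinID("P2", 0.050, 4),  # fails the confidence cutoff
    ProteinID("P3", 0.005, 1),  # fails the peptide-count cutoff
]
kept, removed = filter_identifications(ids)
```

Returning the removed entries alongside the kept ones makes it possible to review what was discarded, which is useful when a biologically relevant low-abundance protein might sit just below a cutoff.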

3. Statistical Analysis

Statistical analysis plays a critical role in assessing the variability, reproducibility, and robustness of proteomics data. Statistical methods such as hypothesis testing and error modeling can be used to quantify variation within the dataset and assess its reproducibility. Quality control metrics, including peak shape, signal-to-noise ratio, and repeatability across replicates, help distinguish high-quality data from unreliable measurements.
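Repeatability across replicates is often summarized with the coefficient of variation (CV), the standard deviation divided by the mean. The sketch below computes a per-protein CV on linear-scale intensities and flags proteins above a cutoff; the function names and the 0.2 cutoff are assumptions for this example.

```python
from statistics import mean, stdev


def replicate_cv(intensities):
    """Return {protein_id: CV} for proteins with at least two replicate values.

    `intensities` maps a protein ID to a list of linear-scale (not log)
    replicate intensities.
    """
    return {
        protein: stdev(values) / mean(values)
        for protein, values in intensities.items()
        if len(values) >= 2 and mean(values) > 0
    }


def flag_variable(cvs, max_cv=0.2):
    """List proteins whose CV exceeds `max_cv` (an assumed cutoff)."""
    return sorted(protein for protein, cv in cvs.items() if cv > max_cv)


# Hypothetical replicate measurements.
replicates = {
    "P1": [100.0, 110.0, 90.0],   # tight replicates
    "P2": [100.0, 300.0, 50.0],   # poorly reproducible
}
cvs = replicate_cv(replicates)
flagged = flag_variable(cvs)
```

In this toy data, `P1` has a CV of 0.1 and passes, while `P2` is flagged for further inspection.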

4. Data Integration and Validation

Once the preceding quality checks are complete, it is often necessary to integrate proteomics data from multiple experiments or sources. This step involves merging datasets using appropriate bioinformatics tools to create a more comprehensive protein expression profile. External validation methods, such as immunoblotting or ELISA, are crucial for confirming the reliability of the integrated data and ensuring the validity of the research conclusions.
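The merging step can be pictured with a small sketch that combines quantification tables keyed by protein accession and reports which proteins were observed in every source. The function names and the dictionary layout are hypothetical, and the sketch assumes the sources already use the same accession scheme and comparable intensity scales.

```python
def merge_datasets(datasets):
    """Combine per-source tables into {accession: {source: value}}.

    `datasets` maps a source name to {accession: quantity}.
    """
    merged = {}
    for source, quantities in datasets.items():
        for accession, value in quantities.items():
            merged.setdefault(accession, {})[source] = value
    return merged


def shared_proteins(merged, n_sources):
    """Return accessions quantified in all `n_sources` sources."""
    return sorted(acc for acc, vals in merged.items() if len(vals) == n_sources)


# Hypothetical datasets from two experiments.
datasets = {
    "experiment_1": {"P1": 10.2, "P2": 8.7},
    "experiment_2": {"P2": 8.9, "P3": 11.4},
}
merged = merge_datasets(datasets)
common = shared_proteins(merged, n_sources=len(datasets))
```

Proteins seen in only one source remain in `merged`, so they can be reviewed rather than silently dropped; the shared subset is a natural starting point for cross-experiment comparison and for choosing candidates for external validation.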

Pros and Cons of the Workflow

1. Advantages

The proteomics data quality assessment workflow offers several significant benefits. First, preprocessing and filtering steps help eliminate noise and low-quality data, resulting in a cleaner and more biologically meaningful dataset. Second, statistical analysis enables researchers to identify variability and systematic biases in their data, improving the accuracy and reliability of subsequent analyses. Finally, integrating and validating data from different sources helps confirm the robustness of the research findings, lending greater credibility to the results.

2. Disadvantages

Despite its advantages, the workflow for proteomics data quality assessment has certain limitations. Preprocessing and filtering steps may unintentionally remove low-abundance proteins that are biologically significant, thus reducing the comprehensiveness of the dataset. Moreover, the accuracy of statistical analysis depends on the models and algorithms used. Different statistical approaches may yield varying results, potentially leading to inconsistencies in data interpretation. Lastly, data integration and validation are often time-consuming and resource-intensive, adding to the overall cost and duration of the research process.
